Predicting the internal world representations – the abstract state of the world that matters most for the world models and object-driven AI, which operate at the next level of intelligence.

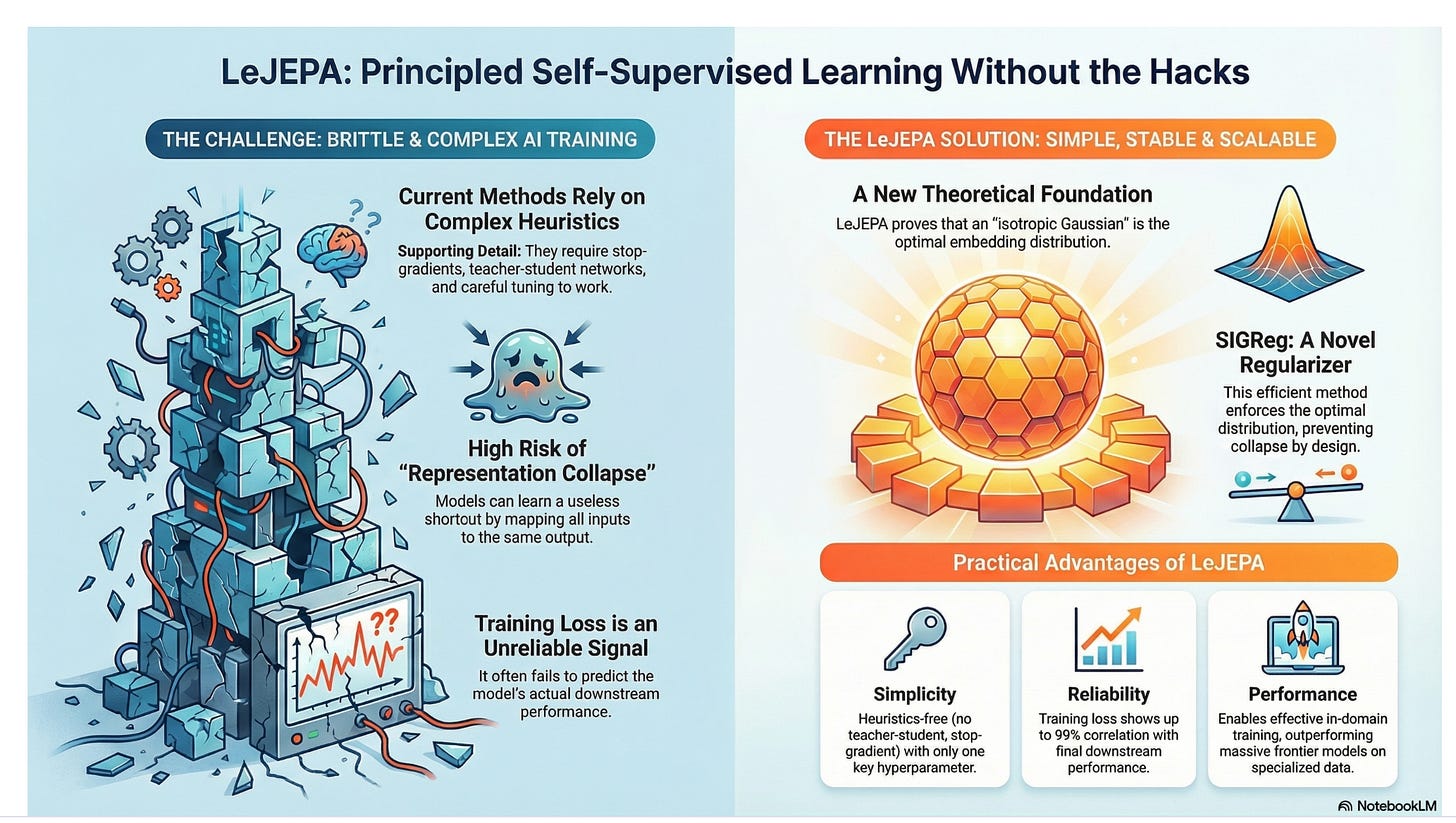

Introduction: From Dark Arts to Principled Design

For years, training advanced Self-Supervised Learning (SSL) models has felt more like an art than a science. Researchers have often found themselves in a frustrating “game of Whac-A-Mole,” wrestling with complex, brittle systems that require a “delicate balance of hyperparameters” and a host of ad-hoc heuristics to work correctly. This complexity has made state-of-the-art AI development a slow, expensive, and often inaccessible process.

A new paper from Meta AI researchers Randall Balestriero and Yann LeCun, titled “LeJEPA,” introduces a breakthrough that promises to replace this complexity with a lean, scalable, and theoretically-grounded approach. It challenges some of the core assumptions in the field and offers a much simpler path forward. This post distills the four most impactful and counter-intuitive takeaways from this research that could reshape how we build AI.

1. The End of “Heuristic Hacking”: AI Training Gets Radically Simpler

The first major revelation is the dramatic simplification LeJEPA brings to SSL. Current methods, known as Joint-Embedding Predictive Architectures (JEPAs), are plagued by a problem called “representation collapse,” where the model learns a useless shortcut by mapping all inputs to the same output. To fight this, researchers rely on a complicated toolkit of tricks.

To mitigate such shortcut solutions, state-of-the-art recipes rely on heuristics–stop-gradient [...], asymmetric view generation [...], teacher–student networks with carefully tuned EMA schedules [...], explicit normalization and whitening layers–and a delicate balance of hyperparameters. As a result, today’s JEPA training is brittle...

LeJEPA eliminates this entire toolkit by solving the collapse problem “by construction”—that is, its core objective actively forces the model’s representations into a desirable, non-collapsed shape, making the old heuristics unnecessary. The practical benefits are profound:

It is heuristics-free, removing the need for complex and unstable components like stop-gradients and teacher-student architectures.

It has only a single trade-off hyperparameter, making it vastly easier to tune compared to previous methods.

The core implementation requires only about 50 lines of code, making state-of-the-art SSL more accessible to the entire research community.

This radical simplification isn’t just a practical convenience; it’s the direct result of a profound theoretical discovery about the very nature of ideal AI representations.

2. The “Golden Rule” for AI Representations: There’s an Optimal Shape for Knowledge

At the heart of any AI model is its internal representation of the world—a high-dimensional “map” of concepts known as embeddings. A central, unanswered question in AI has been what this map should ideally look like. Researchers have used intuition and empirical guesswork, but LeJEPA provides a formal, provably correct answer.

The paper proves that the single optimal distribution for these embeddings, to minimize errors on any future, unknown task, is the isotropic Gaussian. In simple terms, this means the AI’s internal “map” of concepts should look like a perfectly spherical, uniform cloud of points in its high-dimensional space. Think of it this way: a lopsided, warped map has inherent biases, preferring certain directions over others. A perfectly spherical “map,” however, has no preferred direction. It represents an unbiased foundation, making it maximally adaptable and fair for any future task you throw at it, which is the entire goal of a foundation model. This discovery moves the field from heuristic exploration to a clear, mathematically-defined target.

We establish that the isotropic Gaussian uniquely minimizes downstream prediction risk across broad task families. [...] This theoretical result transforms JEPA design from heuristic exploration to targeted optimization.

By providing a provably correct target for the AI’s internal map, LeJEPA does more than just simplify training—it makes the training process itself transparent and reliable for the first time.

3. A Training Loss You Can Finally Trust

One of the biggest pain points in SSL is that the training loss—the number the model is trying to minimize—often has a low correlation with the model’s actual performance on real-world tasks. This forces researchers to constantly run expensive, time-consuming evaluations using labeled data just to check if the model is learning anything useful. It’s like flying blind.

LeJEPA solves this problem. Its training loss shows an exceptionally high correlation with downstream accuracy. The paper demonstrates a 94.52% Spearman correlation for a ViT-base/8 model on the ImageNet-1k dataset. With a simple scaling law, this correlation can be pushed to nearly 99%.

This is a practical game-changer. It enables label-free model selection and cross-validation, allowing developers to confidently use the training loss to identify the best-performing models without needing any labeled data for evaluation. This drastically reduces the cost and complexity of developing high-quality models.

This newfound reliability and simplicity is not just an incremental improvement. It’s so robust that it enables a completely different approach to model training, one that challenges the “bigger is better” mantra dominating the field.

4. David vs. Goliath: Small, Specialized Training Can Beat Giant AI Models

Perhaps the most counter-intuitive result challenges the dominant “transfer learning” paradigm in AI today. The standard approach is to take a massive “frontier model” and adapt it to a specialized domain, such as medical imaging or astronomy. These models are pre-trained on massive, generic datasets—for instance, DINOv2 was trained on 142 million images and DINOv3 on a staggering 1.7 billion.

LeJEPA’s stability and simplicity unlock a powerful new alternative. The paper shows that LeJEPA can be pre-trained from scratch on a small, domain-specific dataset (like the Galaxy10 dataset with only 11,000 galaxy images) and outperform the giant frontier models on that domain’s tasks. For instance, on the Galaxy10 classification task, in-domain pre-training with LeJEPA consistently beats transfer learning from the much larger DINOv2 and DINOv3 models.

This challenges the transfer learning paradigm and demonstrates that principled SSL can unlock effective in-domain pretraining—previously considered impractical for small datasets.

Conclusion: A New Foundation for AI

LeJEPA is more than just another SSL model; it represents a fundamental shift in philosophy. By replacing brittle heuristics with a single mathematical principle, LeJEPA doesn’t just improve state-of-the-art models—it democratizes access to building them.

As AI development moves from brute-force scaling toward more principled and efficient design, what new scientific and creative domains, previously considered too niche for cutting-edge AI, will be unlocked next?